My goal here is not just to relate my experience setting up my home server, but also to make a (hopefully) approachable guide to doing the same for people who are only semi-technical. The first half is mostly a story, but also a primer on some of the terminology in the space and many of the tools that will be invaluable. If you don't know a term or technology, I recommend following the links as you go and reading for a bit. It will help you learn, which in the end is the best part of setting up a home lab :).

1. Plex

The beginning of my foray into home labs was with Plex. If you are unaware, Plex is a way to host your own content and access it remotely. It's targeted at a general audience; you really don't need to be tech savvy at all to get started. All was good until they changed their policies so that remote access required a paid subscription. This was roughly 2 months after I paid for the iOS remote access feature, which at the time was a one-time payment of something like $5-10 USD. That was fine, but this subscription thing was, in my opinion, bullshit, so I looked into alternatives, which is where I discovered Jellyfin.

2. PewDiePie made me Jelly

So I got started with Jellyfin. As is typical with open source software, a higher degree of technical knowledge is expected, and the generally assumed pathway is to access it only over your local network. The reason is that opening up remote access brings security considerations: once something is reachable from the internet, someone (or rather, someone's botnet) will try to gain access[1].

Dec 22 00:54:45 pan kernel: [UFW BLOCK] IN=wlan0 OUT= MAC=2c:cf:67:4f:bd:bc:3c:84:6a:c6:3b:38:08:00 SRC=192.168.0.1 DST=192.168.0.64 LEN=374 TOS=0x00 PREC=0x00 TTL=64 ID=30053 >

Dec 22 00:54:25 pan kernel: [UFW BLOCK] IN=wlan0 OUT= MAC=2c:cf:67:4f:bd:bc:3c:84:6a:c6:3b:38:08:00 SRC=192.168.0.1 DST=192.168.0.64 LEN=374 TOS=0x00 PREC=0x00 TTL=64 ID=13702 >

Dec 22 00:54:05 pan kernel: [UFW BLOCK] IN=wlan0 OUT= MAC=2c:cf:67:4f:bd:bc:3c:84:6a:c6:3b:38:08:00 SRC=192.168.0.1 DST=192.168.0.64 LEN=374 TOS=0x00 PREC=0x00 TTL=64 ID=3935 D>

Dec 22 00:53:42 pan kernel: [UFW BLOCK] IN=wlan0 OUT= MAC=01:00:5e:00:00:01:3c:84:6a:c6:3b:38:08:00 SRC=192.168.0.1 DST=224.0.0.1 LEN=32 TOS=0x00 PREC=0x00 TTL=1 ID=33112 DF PR>

Dec 22 00:53:39 pan sshd-session[2569220]: Connection reset by authenticating user root 45.140.17.124 port 41492 [preauth]

Dec 22 00:53:37 pan sshd-session[2569213]: Connection reset by authenticating user root 45.140.17.124 port 22224 [preauth]

Dec 22 00:53:35 pan sshd-session[2569132]: Connection reset by authenticating user root 45.140.17.124 port 22214 [preauth]

Dec 22 00:53:32 pan sshd-session[2569053]: Connection reset by authenticating user root 45.140.17.124 port 22186 [preauth]

The above is people trying to gain access to my server via brute-force SSH attempts (before being blocked by Fail2ban). The other tool at work is UFW, aka Uncomplicated Firewall, which produced the [UFW BLOCK] lines. We will come back to this; it's super easy to set up in a very restrictive manner once you have everything else set up.
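Fail2ban works by watching logs like these. It counts failures per source IP and adds a temporary firewall ban once an IP crosses a threshold (its maxretry setting) within a time window (findtime). Purely to illustrate the idea, here is a toy Go sketch of the counting step. It is not how fail2ban is implemented. The regular expression is an assumption tuned to the sshd lines above, the sketch ignores the time window, and it only reports which IPs would be banned rather than touching the firewall.

```go
package main

import (
	"fmt"
	"regexp"
)

// failedAuth matches sshd lines like the ones above and captures the source IP.
// The pattern is an assumption tuned to the "Connection reset by authenticating user"
// messages shown here; fail2ban's real sshd filter covers many more formats.
var failedAuth = regexp.MustCompile(`sshd[^:]*: Connection reset by authenticating user \S+ (\d{1,3}(?:\.\d{1,3}){3}) port \d+ \[preauth\]`)

// ipsToBan counts matching lines per source IP and returns the IPs that reached
// maxRetry, in the order they reached it. Unlike fail2ban, it ignores findtime.
func ipsToBan(lines []string, maxRetry int) []string {
	counts := make(map[string]int)
	var banned []string
	for _, line := range lines {
		m := failedAuth.FindStringSubmatch(line)
		if m == nil {
			continue // not an sshd failure we recognise (e.g. the UFW lines)
		}
		ip := m[1]
		counts[ip]++
		if counts[ip] == maxRetry { // record each IP once, when it hits the threshold
			banned = append(banned, ip)
		}
	}
	return banned
}

func main() {
	logLines := []string{
		"Dec 22 00:53:39 pan sshd-session[2569220]: Connection reset by authenticating user root 45.140.17.124 port 41492 [preauth]",
		"Dec 22 00:53:37 pan sshd-session[2569213]: Connection reset by authenticating user root 45.140.17.124 port 22224 [preauth]",
		"Dec 22 00:53:35 pan sshd-session[2569132]: Connection reset by authenticating user root 45.140.17.124 port 22214 [preauth]",
		"Dec 22 00:53:32 pan sshd-session[2569053]: Connection reset by authenticating user root 45.140.17.124 port 22186 [preauth]",
	}
	fmt.Println(ipsToBan(logLines, 3)) // prints [45.140.17.124]
}
```

Real fail2ban filters match many more sshd message formats, so use fail2ban itself for the actual banning.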

PewDiePie (aka Felix Kjellberg) then released a video about his kickass setup, and I was like, ok, my setup is embarrassing. Time to make it legit.

2.5 Going headless

It was also around this time that I went fully headless on my Raspberry Pi (using the Raspberry Pi OS Lite image). For whatever reason my startup times were god awful (>2 min), so I did a clean wipe and installed only what I actually needed.

3. Wtf is a reverse proxy

So back to the PewDiePie thing: as mentioned, he released this video (I'm DONE with Google). As a short aside, shortly before that he released a video about his kick-ass Linux rice that is also a great watch. Anyway, the video inspired me to go further with my homelab. To do that, I had to figure out how to connect to each of these services. Do I make a landing page that somehow swaps between them? I didn't know. After some quick searching (and asking chat gippity), I decided to make each service a different subdomain of my domain (at the time freno.me). All of these subdomains actually point at the same machine, but every incoming request carries the hostname that was looked up (in its Host header). Based on that, the reverse proxy routes the request to the relevant service on the machine.
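To make the idea concrete, here is a minimal Go sketch of host-based routing using the standard library's httputil.ReverseProxy. It only illustrates what nginx does for me and is not part of my setup. The hostnames under example.com are hypothetical, the ports mirror the ones used later in this guide, and it listens on plain HTTP on an arbitrary port (8080) with no TLS.

```go
package main

import (
	"log"
	"net"
	"net/http"
	"net/http/httputil"
	"net/url"
	"strings"
)

// backends maps each subdomain to the local service behind it.
// example.com and these subdomains are hypothetical; 8081/8082 match ports used later.
var backends = map[string]string{
	"vault.example.com": "http://127.0.0.1:8081",
	"chat.example.com":  "http://127.0.0.1:8082",
}

// backendFor turns a Host header value (which may include a port) into a backend URL.
func backendFor(host string) (string, bool) {
	if h, _, err := net.SplitHostPort(host); err == nil {
		host = h // drop an explicit port such as ":443"
	}
	target, ok := backends[strings.ToLower(host)]
	return target, ok
}

func main() {
	// One reverse proxy per backend URL, built once at startup.
	proxies := make(map[string]*httputil.ReverseProxy)
	for _, target := range backends {
		u, err := url.Parse(target)
		if err != nil {
			log.Fatal(err)
		}
		proxies[target] = httputil.NewSingleHostReverseProxy(u)
	}

	http.HandleFunc("/", func(w http.ResponseWriter, r *http.Request) {
		// Every subdomain resolves to this same machine; r.Host says which one was asked for.
		target, ok := backendFor(r.Host)
		if !ok {
			http.Error(w, "unknown host", http.StatusNotFound)
			return
		}
		proxies[target].ServeHTTP(w, r)
	})
	log.Fatal(http.ListenAndServe(":8080", nil))
}
```

The key line is the lookup on r.Host. Every subdomain resolves to the same IP, so the hostname in the request is the only thing that tells the proxy which service you wanted. In my setup nginx plays this role: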

graph TD

A[Request] --> B{DNS lookup}

B -->|My Server| C[nginx]

C --> D[jellyfin]

C --> E[Open WebUI]

C --> F[etc etc]

4. Open WebUI oh my!

At the time I was still very basic with my AI use. I had never done any terminal agent work (Claude Code/opencode) or used any of the AI editors like Cursor, so asking a question and then copy-pasting was perfectly fine for me.

5. Vaultwarden, File browser

Vaultwarden is a ready-to-self-host implementation of the Bitwarden Client API, written in Rust (nice). It's fantastic, easy to set up, and secure. File browser is the least exciting thing I set up; it's just a simple way to remotely store and view any files I want to offload or back up from my devices.

6. Opencode

Opencode is an agentic terminal program, similar to Claude Code, but open source, and it allows access to any model from any provider, including self-hosted ones. I wanted to give it a try. I had never really bought into the vibe coding thing, but I thought I shouldn't write off a tool just because some people go too far with it, so I gave it a go. Qwen3-Coder had been released literally days before, and after some configuration I got it running. And it's fine. I also tried Claude Sonnet 4 and 4.5, and they're fine. It's definitely faster than copying and pasting, but I have less time to notice the errors it makes before it moves on to the next logical part of the chain, so I end up spending even more time correcting things.

I then tried infill, or FIM[2], which from what I understand is what Cursor's Tab (non-agent mode) and Supermaven do. Qwen3-Coder is also capable of this, and with some basic tweaking it's pretty damn good: I get something like 1000 tokens/s on my 3090 (if you don't know, that's really fuckin fast for self-hosted models). I did a huge amount of tuning to get every ounce of VRAM used. When I'm doing work I typically run the machine headless (it's also my gaming machine). I use a script to load the various models at different context sizes. Increasing the context increases VRAM usage, so I have to reduce how much of the model runs in VRAM and put the rest in system RAM, which is way, way slower.
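Here is part of why context size eats VRAM. Besides the model weights, the server keeps a key/value (KV) cache that holds one key and one value vector per layer, per KV head, for every token of context, so its size grows linearly with context length. The following is a back-of-the-envelope Go sketch. It assumes a standard transformer with an unquantized f16 cache (2 bytes per element). The model shape below is hypothetical, so plug in the real numbers from your model's config.

```go
package main

import "fmt"

// kvCacheBytes estimates KV-cache size: 2 (one K and one V) x layers x KV heads
// x head dimension x context length x bytes per element.
// int64 avoids overflow on 32-bit builds (e.g. 32-bit Raspberry Pi OS).
func kvCacheBytes(layers, kvHeads, headDim, ctx, bytesPerElem int64) int64 {
	return 2 * layers * kvHeads * headDim * ctx * bytesPerElem
}

func main() {
	const gib = float64(1 << 30)
	// Hypothetical model shape: 48 layers, 4 KV heads, head_dim 128, f16 cache (2 bytes).
	for _, ctx := range []int64{8192, 32768, 131072} {
		b := kvCacheBytes(48, 4, 128, ctx, 2)
		fmt.Printf("ctx %6d -> %5.2f GiB\n", ctx, float64(b)/gib)
	}
}
```

With that hypothetical shape, 8K of context costs 0.75 GiB, 32K costs 3 GiB and 128K costs 12 GiB, all on top of the weights. That is why a bigger context pushes more of the model out of VRAM.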

Shortly before writing this article (technically, while writing it), I even integrated it with the text editor on this site, the one I'm using to write this very article, and it was surprisingly painless. It ain't great, but it's kinda cool.

6.5 Need a new auth method

Ok, so now what am I going to do? I need a way to make sure only I have access to the infill endpoint, I want it from anywhere, and of course there is no web frontend to log in through. Enter nginx's auth_request: nginx can ask a separate service whether each request is allowed before passing it along. So I built a Go (golang my beloved) server that checks the token the client sends, which is signed with the client's private key, against the public key living on the server. Works great, bing bang boom.

So now the way the setup works is like this:

graph TD

A[Request] --> B{DNS lookup}

B -->|My Server| C[nginx]

C --> D[jellyfin]

C --> E[Open WebUI]

C --> F[LLM direct]

F -->|Preroute| G[Auth service]

G -->|Pass| H[LLM direct]

G -->|Fail| I[401]

7. Gitea because Microsoft is speed running reputation destruction

If you've been living under a rock and don't know about Microsoft completely shitting the bed when it comes to its consumer-facing products, let me give you a quick gloss of what the past few years have looked like. Everything is a subscription behind 365, their Office subscription suite. No more just buying a Word or Excel license; it's all subscription based now. The whole point of that is to get you paying for a service you don't want, which they can then just shut off at any time. Meanwhile, Windows has been pushing its shitty Copilot implementation, which literally can't do what it was pitched to do, and no one wants it.

On top of that, the OS as a whole has been completely enshittified. There are ads in the Start menu[3]. File Explorer (which was not the best to begin with) is slower now than in Windows 10[4], and to fix that they implemented a shoddy hack that preloads a bunch of shit and slows down startup[5]. Oh yeah, and they are blocking every way to create a local account[6], forcing the need for internet access and for forking more info over to Microsoft, of course. It's funny: the only OS you actually have to pay for is also the only one with fucking ads in it and the only one that requires internet access to set up.

It’s very clear that Microsoft is focused solely on its b2b segment, and trying to milk the consumer for all they’re worth.

So with all of this, I want as little contact as possible with the sludge monster that Microsoft now is. My only remaining point of contact is GitHub[7] (oh yeah, and LinkedIn, which also just feels gross; I completely forgot about it because I try to black it out from my mind). GitHub is reaaally hard to ditch completely, and it probably will be for the foreseeable future, but I want to make whatever progress I can.

With that goal in mind, I looked into self-hosted git servers. Gitea seemed good and was mostly painless. I had some issues with container write permissions that took too long to sort out, but it's up now and working great.

8. Also consider Nextcloud

While I don't personally run it, Nextcloud is apparently quite good. I just don't have a need for it, so I can't comment beyond that.

Running this yourself (or something similar)

Note: this is written to be followed step by step so that you learn to use these tools. If you want to set it and forget it, you can use this file to set up your system (assuming a Debian-based distribution), but this is not recommended.

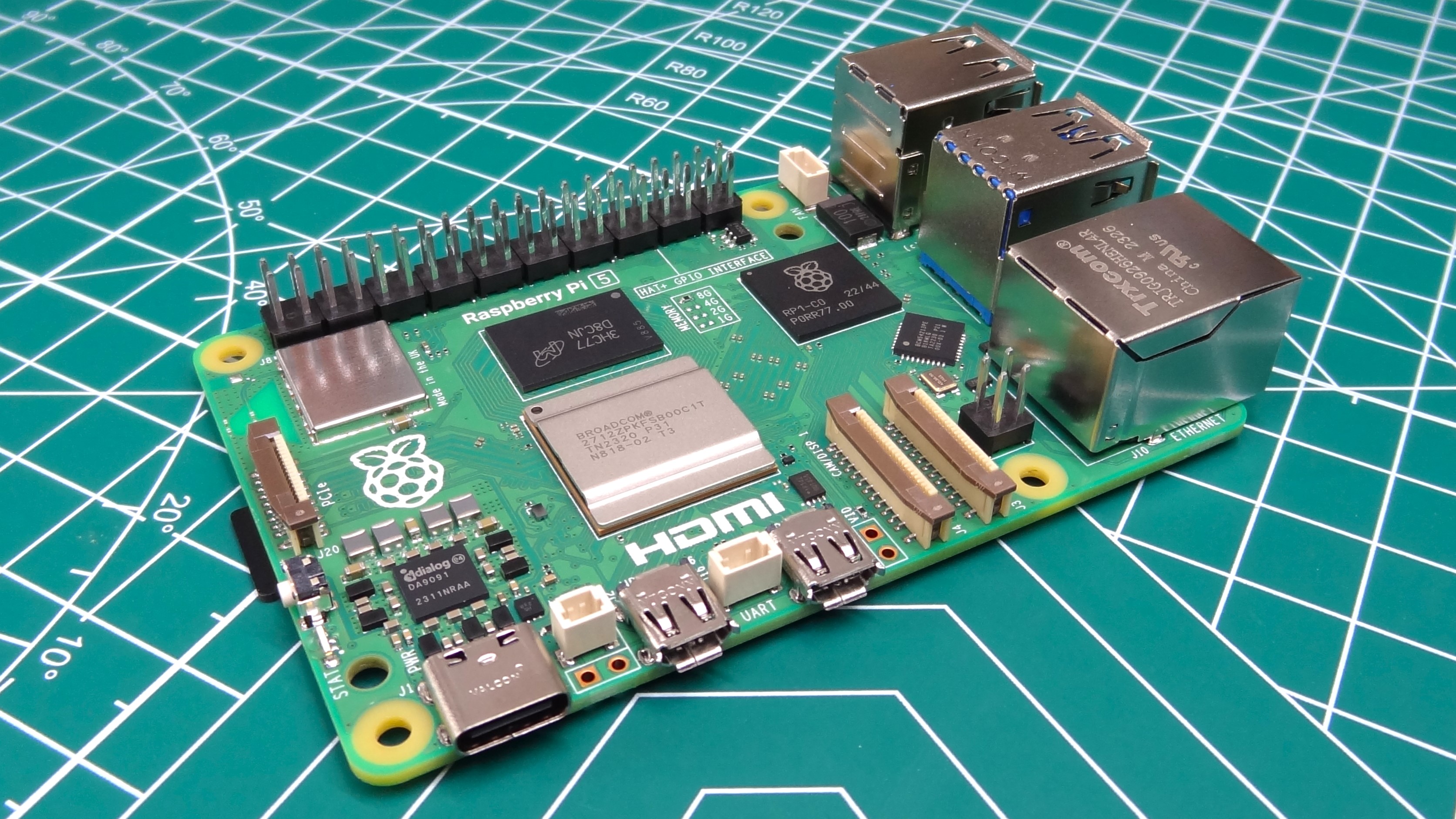

Getting started has gotten a tad more expensive recently because memory (RAM) prices have skyrocketed. That already affects any computer not made by the mass-market giants (the Apples and Samsungs of the world), and it will affect those soon too. So even though Raspberry Pi has raised its prices, I still think a Pi is very worthwhile; it's a great little machine.

I use the Raspberry Pi 5 8GB[8], and it has more than enough RAM for all these services when it's basically just me using them. If you plan on having multiple people use these services concurrently, or you plan on streaming 4K video, you may want to look into a used Mac mini[9] or something similar; either will outperform a Pi quite considerably. Note that the rest of this article assumes Debian (which Raspberry Pi OS is based on).

I would also highly suggest getting yourself a domain name. It makes it much easier to connect to your machine and services, and it opens the door to added security. It usually costs around $15 a year and is well worth it, especially if you plan on running Vaultwarden or the like, in which case it's required. Many ISPs[10] assign your public IP address dynamically, so you will likely also need a way to keep your domain pointing at the right place. A dynamic DNS service like No-IP keeps a hostname updated with your current IP, while Tailscale (recommended) gives your server a stable address on your own private network. Additionally, your router probably assigns local IP addresses dynamically too, so log in to your router and reserve a fixed local IP for your server.

Once you have your machine and the basics set up (networking, etc.), you will want to set up your SSH keys.

If your machine doesn’t have ssh configured:

sudo apt install openssh-server

# Enable and start the SSH service

sudo systemctl enable ssh

sudo systemctl start ssh

# Check if SSH is running

sudo systemctl status ssh

The most secure way to handle logins, and the way you should do it if the server will be reachable from the internet, is to require public key authentication[11]. To set it up, first make sure your client machine has an SSH key pair:

ls -la ~/.ssh/

If you see files like id_rsa and id_rsa.pub, or id_ed25519 and id_ed25519.pub, you're set. If you don't see files like that, you need to make them:

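ssh-keygen -t rsa -b 4096 -C "<your email>"

(ssh-keygen -t ed25519 -C "<your email>" works too and produces a smaller, more modern key.) Now that you have a key pair, you need to share the public key with your server, which is easy with ssh-copy-id: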

ssh-copy-id <username>@<server_ip>

Once you have done this, and confirmed that you can log in with the key, we can proceed with locking down your server a bit. Here is a script that handles the change:

#!/bin/bash
# Script to disable password authentication and enable key-based authentication for SSH
echo "Configuring SSH to require key authentication and disable password authentication..."

# Back up the original config file, remembering the exact name so we can restore it below
BACKUP_FILE="/etc/ssh/sshd_config.backup.$(date +%Y%m%d_%H%M%S)"
sudo cp /etc/ssh/sshd_config "$BACKUP_FILE"

# Use sed to modify the configuration file directly (this also uncomments the defaults)
sudo sed -i 's/^#*PasswordAuthentication.*/PasswordAuthentication no/' /etc/ssh/sshd_config
sudo sed -i 's/^#*PubkeyAuthentication.*/PubkeyAuthentication yes/' /etc/ssh/sshd_config
sudo sed -i 's/^#*PermitRootLogin.*/PermitRootLogin no/' /etc/ssh/sshd_config

# Add the lines if they don't exist
grep -q "^PasswordAuthentication" /etc/ssh/sshd_config || echo "PasswordAuthentication no" | sudo tee -a /etc/ssh/sshd_config
grep -q "^PubkeyAuthentication" /etc/ssh/sshd_config || echo "PubkeyAuthentication yes" | sudo tee -a /etc/ssh/sshd_config
grep -q "^PermitRootLogin" /etc/ssh/sshd_config || echo "PermitRootLogin no" | sudo tee -a /etc/ssh/sshd_config

# Verify the changes in the file
echo "Updated SSH configuration:"
grep -E "^(PasswordAuthentication|PubkeyAuthentication|PermitRootLogin)" /etc/ssh/sshd_config

# Test SSH configuration syntax
echo "Testing SSH configuration..."
if sudo sshd -t; then
    echo "SSH configuration is valid"
    # Show the values sshd will actually use. On Debian, sshd_config includes
    # /etc/ssh/sshd_config.d/*.conf near the top, and sshd keeps the first value it reads
    # for each keyword, so files in there can override the edits above.
    sudo sshd -T | grep -E "^(passwordauthentication|pubkeyauthentication|permitrootlogin) "
    # Restart SSH service
    echo "Restarting SSH service..."
    sudo systemctl restart ssh
    echo "SSH configuration updated successfully!"
else
    echo "SSH configuration test failed. Reverting changes..."
    sudo cp "$BACKUP_FILE" /etc/ssh/sshd_config
    sudo systemctl restart ssh
    exit 1
fi

Next, on the security side of things, we will add UFW (Uncomplicated Firewall).

sudo apt install ufw

sudo ufw default deny incoming

sudo ufw default allow outgoing

sudo ufw allow 22/tcp # If you are going to change the default port for ssh, make sure this matches

sudo ufw allow 80/tcp

sudo ufw allow 443/tcp

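sudo ufw enable

Next, we need to add Docker[12]. You can run these services without it, but I generally find them more stable and more easily managed when run in a container. One caveat worth knowing: ports published by Docker (the -p flag) bypass UFW's rules, which is why the containers below bind to 127.0.0.1 and are only reached through nginx.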

The following installs just the Docker Engine and CLI from Docker's own apt repository (these are Docker's official Debian install steps):

sudo apt-get update

sudo apt-get install ca-certificates curl

sudo install -m 0755 -d /etc/apt/keyrings

sudo curl -fsSL https://download.docker.com/linux/debian/gpg -o /etc/apt/keyrings/docker.asc

sudo chmod a+r /etc/apt/keyrings/docker.asc

# Add the repository to Apt sources:

echo \
  "deb [arch=$(dpkg --print-architecture) signed-by=/etc/apt/keyrings/docker.asc] https://download.docker.com/linux/debian \
  $(. /etc/os-release && echo "$VERSION_CODENAME") stable" | \
  sudo tee /etc/apt/sources.list.d/docker.list > /dev/null

sudo apt-get update

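sudo apt-get install docker-ce docker-ce-cli containerd.io docker-buildx-plugin docker-compose-plugin

The scripts and service files below run docker as your regular user, so add yourself to the docker group (then log out and back in for it to take effect):

sudo usermod -aG docker $USER

Ok, so at this point we can start setting up services. Let's start with Vaultwarden, because having a trusted password manager is a huge W: it will seriously improve your security and reduce headaches. Save the following as a script, make it executable (chmod +x <script_filename>), and here we go: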

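#!/bin/bash
# Notes on the flags (bash can't have a comment after a trailing "\", so they live up here):
# -p 127.0.0.1:8081:80 controls which port you connect on. I use this same port (8081) later in nginx,
#   so if you change it here, change it there too. Binding to 127.0.0.1 means it's only reachable via nginx.
# DOMAIN is the full https URL you'll reach the vault at. Note the https: Vaultwarden will not work
#   over plain http, so you need a cert (we will go over this).
# Once everyone you intend to use this has signed up, add "-e SIGNUPS_ALLOWED=false" to the run command,
#   remove the existing container (docker rm -f vaultwarden; your data stays in /srv/vaultwarden/data),
#   and rerun this script. Environment variables are fixed when a container is created.

DOCKER_PATH=$(which docker)

$DOCKER_PATH start vaultwarden || \
  $DOCKER_PATH run -d \
    --name vaultwarden \
    -v /srv/vaultwarden/data:/data \
    -p 127.0.0.1:8081:80 \
    -e DOMAIN=https://vault.<your_domain> \
    --restart unless-stopped \
    vaultwarden/server:latest

Some may prefer to use docker compose for these services (I do for one of them), but for most the config is simple enough that I just slap it in a bash script.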

Now, we will repeat this next process for each additional service. First we need to add some DNS records, so at your registrar, find where you can edit DNS records and add the following:

| host | type | TTL | data |
|---|---|---|---|
| vault | CNAME | 4 hrs | (depends) |

Note: for the host we will use vault, but it can be whatever you want; we will assume vault from here on.

Note 2: the data depends on how you set up your IP stabilization, but it needs to point at your server. A CNAME must point at another hostname (such as the one your dynamic DNS provider gives you). If you're pointing directly at your server's IP address, use an A record instead.

Now let's get nginx set up (see the nginx documentation for install instructions; on Debian, sudo apt install nginx is all you need). We will add one service at a time to keep things from getting overwhelming (and so we can add certs as we go).

Now let's make a new site file in sites-available for nginx:

sudo touch /etc/nginx/sites-available/<domain> # or whatever you want to call it

sudo vim /etc/nginx/sites-available/<domain> # use whatever editor you like; I use vim, and nano is typically preinstalled

Now add the following:

# this is where we get the incoming request

server {

listen 80;

server_name vault.<your_domain>; #these must match. Example: vw.freno.me (what I use)

return 301 https://$host$request_uri;

}

# this is where the request will be routed

server {

listen 443 ssl;

listen [::]:443 ssl;

http2 on;

server_name vault.<your_domain>; #these must match. Example: vw.freno.me (what I use)

location / {

proxy_pass http://127.0.0.1:8081;

proxy_set_header Host $host;

proxy_set_header X-Real-IP $remote_addr;

proxy_set_header X-Forwarded-For $proxy_add_x_forwarded_for;

proxy_set_header X-Forwarded-Proto $scheme;

}

location /notifications/hub {

proxy_pass http://127.0.0.1:8081;

proxy_http_version 1.1;

proxy_set_header Upgrade $http_upgrade;

proxy_set_header Connection "upgrade";

}

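}

Debian's nginx only loads sites that are linked into sites-enabled, so link the new file there:

sudo ln -s /etc/nginx/sites-available/<domain> /etc/nginx/sites-enabled/

Now test, and reload: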

sudo nginx -t

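sudo systemctl reload nginx

If nginx -t reports an unknown "http2" directive, your nginx is older than 1.25.1. Remove the http2 on; line and add http2 to the listen lines instead (listen 443 ssl http2;).

Now we can get certs with certbot. To install: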

sudo apt install certbot python3-certbot-nginx

And to use it:

sudo certbot --nginx -d vault.<your_domain>

This will fail if your DNS records aren't set properly, because Let's Encrypt connects to your server (over port 80) to verify that you control the domain. If the server sits behind a home router, that also means forwarding ports 80 and 443 to it. Once verification succeeds, certs will be created and certbot will edit your sites-available file. It adds the paths to where your certs are stored, plus comments indicating that those lines are managed by certbot. It should go without saying, but don't modify those new lines. Certbot will also have set up a renewal timer, which you can verify with:

sudo systemctl status certbot.timer

Now go ahead and connect to your service! Go to https://vault.<your_domain> in your browser and it should be live!

To have the service run on startup, we will need a systemd service file. Save the following as a script, fill in SCRIPT_PATH and the User line, give it executable permission (chmod +x <script_filename>), and then run it with elevated permissions (sudo).

#!/bin/bash

# Script to create Vaultwarden service file

SERVICE_FILE="/etc/systemd/system/vaultwarden.service"

SCRIPT_PATH= # add the absolute path to the previously made script that runs the docker container

# Check if running as root

if [ "$EUID" -ne 0 ]; then

echo "Please run as root (sudo)"

exit 1

fi

# Create the service file with the specified contents

cat > "$SERVICE_FILE" << EOF

[Unit]

Description=Vaultwarden Docker Container

# This service requires the Docker service and will start after it and the network are ready.

Requires=docker.service

After=network.target docker.service

[Service]

Type=simple

# Replace <your_user> with your user account (systemd only allows comments on their own line)

User=<your_user>

ExecStart=$SCRIPT_PATH

Restart=on-failure

RestartSec=10s

[Install]

WantedBy=multi-user.target

EOF

Then reload systemd so it picks up the new file, enable the service to start on boot, and start it now:

sudo systemctl daemon-reload

sudo systemctl enable vaultwarden.service

sudo systemctl start vaultwarden.service

For each of the following services, remember to add DNS records in the same pattern as above, add an nginx server block pointing at the service's port, run certbot for the new subdomain (just replace 'vault' with the new one), and create (and enable) a service file like the one above.

Let's quickly go over the other simple services:

Open WebUI

#!/bin/bash
DOCKER=$(which docker)

# This (Watchtower) watches for new image versions and automatically updates. It is not required.
if ! $DOCKER ps -q -f name=watchtower | grep -q .; then
  $DOCKER run -d \
    --name watchtower \
    --restart unless-stopped \
    -v /var/run/docker.sock:/var/run/docker.sock \
    containrrr/watchtower \
    --interval 300 \
    --cleanup
fi

if ! $DOCKER ps -q -f name=chat | grep -q .; then
  $DOCKER run -d \
    --name chat \
    --restart unless-stopped \
    -p 127.0.0.1:8082:8080 \
    -v open-webui:/app/backend/data \
    ghcr.io/open-webui/open-webui:main
fi

File browser:

#!/bin/bash
DOCKER_PATH=$(which docker)

$DOCKER_PATH start filebrowser || \
  $DOCKER_PATH run -d \
    --name filebrowser \
    -p 127.0.0.1:8083:80 \
    -v /srv/filebrowser/config:/config \
    -v /<wherever_you_want_to_store>/filebrowser/:/srv \
    -u 1000:1000 \
    --restart unless-stopped \
    filebrowser/filebrowser

Gitea is more complicated, so I run it with docker compose.

# docker-compose.yml
networks:
  gitea:
    external: false

services:
  server:
    image: docker.gitea.com/gitea:1.25.2
    container_name: gitea
    environment:
      - USER_UID=1000
      - USER_GID=1000
      - GITEA__database__DB_TYPE=postgres
      - GITEA__database__HOST=db:5432
      - GITEA__database__NAME=gitea
      - GITEA__database__USER=gitea
      - GITEA__database__PASSWD=gitea # change this, and match POSTGRES_PASSWORD below
    restart: always
    networks:
      - gitea
    volumes:
      - <gitea_storage_path>:/data # wherever you want to store the git data
      - /etc/timezone:/etc/timezone:ro
      - /etc/localtime:/etc/localtime:ro
    ports:
      - "127.0.0.1:8085:3000" # web UI, reached through nginx (Docker-published ports bypass UFW)
      - "2222:22" # maps Gitea's ssh to a different host port; not required
    depends_on:
      - db

  db:
    image: docker.io/library/postgres:14
    restart: always
    environment:
      - POSTGRES_USER=gitea
      - POSTGRES_PASSWORD=gitea # change this, and match GITEA__database__PASSWD above
      - POSTGRES_DB=gitea
    networks:
      - gitea
    volumes:
      - <postgres_storage_path>:/var/lib/postgresql/data # wherever you want to store the database

Note: both storage paths above need the same permissions as the user running the service, so it's probably easiest to put them in /home/<main_user>/. Additionally, the following assumes that they are in the same directory.

Gitea backup:

#!/bin/bash

BACKUP_DIR= # where ever you want it

DATE=$(date +%Y%m%d-%H%M%S)

GITEA_CONTAINER="gitea" # Your container name from docker-compose if you changed it

COMPOSE_DIR= #absolute path to where your docker-compose.yml is stored

# Create backup directory

mkdir -p "${BACKUP_DIR}"

cd "${COMPOSE_DIR}"

echo "Creating Gitea backup..."

# Create dump as git user in /tmp directory

docker exec -u git -w /tmp "${GITEA_CONTAINER}" /usr/local/bin/gitea dump -c /data/gitea/conf/app.ini

# Get the actual dump filename

DUMP_FILENAME=$(docker exec "${GITEA_CONTAINER}" sh -c 'ls -t /tmp/gitea-dump-*.zip 2>/dev/null | head -1')

if [ -z "$DUMP_FILENAME" ]; then

echo "Error: Backup file not created!"

exit 1

fi

echo "Copying backup to host..."

docker cp "${GITEA_CONTAINER}:${DUMP_FILENAME}" "${BACKUP_DIR}/gitea-backup-${DATE}.zip"

# Clean up the dump file in the container

echo "Cleaning up container..."

docker exec "${GITEA_CONTAINER}" rm -f "${DUMP_FILENAME}"

# Keep only last 7 backups

echo "Cleaning old backups..."

cd "${BACKUP_DIR}"

ls -t gitea-backup-*.zip 2>/dev/null | tail -n +8 | xargs -r rm -f

echo ""

echo "✓ Backup completed: ${BACKUP_DIR}/gitea-backup-${DATE}.zip"

du -h "${BACKUP_DIR}/gitea-backup-${DATE}.zip"

echo ""

echo "Recent backups:"

ls -lht "${BACKUP_DIR}"/gitea-backup-*.zip | head -5

Here are the service files for Gitea (these go in /etc/systemd/system/):

# gitea.service

[Unit]

Description=Gitea Docker Compose

After=network.target docker.service

Requires=docker.service

[Service]

Type=oneshot

RemainAfterExit=yes

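User=<your_user>

WorkingDirectory=<directory_containing_docker-compose.yml>

# The docker-compose-plugin installed earlier provides "docker compose" (with a space)

ExecStart=/usr/bin/docker compose up -d

ExecStop=/usr/bin/docker compose down

[Install]

WantedBy=multi-user.target

# gitea-backup.service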

[Unit]

Description=Gitea Backup Service

[Service]

Type=oneshot

ExecStart=<absolute_path_to_backup_script>

User=<your_user>

[Install]

WantedBy=multi-user.target

# gitea-backup.timer

[Unit]

Description=Run Gitea backup every 12 hours

[Timer]

OnBootSec=10min

OnCalendar=*-*-* 00,12:00:00

Persistent=true

AccuracySec=1min

[Install]

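WantedBy=timers.target

Then reload systemd and enable Gitea and the backup timer (the timer triggers the backup service on schedule):

sudo systemctl daemon-reload

sudo systemctl enable --now gitea.service gitea-backup.timer

Ok, so now we get to the complicated one. This can be used to self-host an LLM and access it directly with something like opencode, or with infill services. First, we need a way to make sure that you, and only you, have access to it. As with any API key, keep it secret, keep it safe.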

mkdir ~/infill-keys && cd ~/infill-keys

openssl genpkey -algorithm ED25519 -out client_private.pem

# Extract public key

openssl pkey -in client_private.pem -pubout -out client_public.pem

The above makes a directory where we store the source keys. We will use them to make JSON Web Tokens (JWTs), which can be quickly swapped out and are well suited to sending in HTTPS requests. The client signs a token with the private key, and the server only needs the public key to check it.

# within the above made dir

mkdir jwt_gen && mv client_private.pem jwt_gen/ && cd jwt_gen && go mod init jwt_gen && touch main.go

Add the following code to main.go:

package main

import (

"crypto/ed25519"

"crypto/x509"

"encoding/pem"

"fmt"

"log"

"os"

"time"

"github.com/golang-jwt/jwt/v4"

)

// loadEd25519PrivateKey reads a PEM file that contains an Ed25519 private key.

func loadEd25519PrivateKey(path string) (ed25519.PrivateKey, error) {

b, err := os.ReadFile(path)

if err != nil {

return nil, fmt.Errorf("read key file: %w", err)

}

block, _ := pem.Decode(b)

if block == nil {

return nil, fmt.Errorf("failed to PEM-decode key")

}

// openssl genpkey writes PKCS#8, which x509.ParsePKCS8PrivateKey handles

key, err := x509.ParsePKCS8PrivateKey(block.Bytes)

if err != nil {

// Fall back to raw key bytes: a 32-byte seed or a full 64-byte private key

switch len(block.Bytes) {

case ed25519.SeedSize:

return ed25519.NewKeyFromSeed(block.Bytes), nil

case ed25519.PrivateKeySize:

return ed25519.PrivateKey(block.Bytes), nil

}

return nil, fmt.Errorf("parse PKCS#8 key: %w", err)

}

priv, ok := key.(ed25519.PrivateKey)

if !ok {

return nil, fmt.Errorf("key is not Ed25519")

}

return priv, nil

}

func main() {

priv, err := loadEd25519PrivateKey("client_private.pem") // path to your PRIVATE key

if err != nil {

log.Fatalf("could not load private key: %v", err)

}

// Build a JWT with whatever claims you want.

// NOTE: no "exp" claim – the token will never expire.

claims := jwt.MapClaims{

"iss": "llama-direct", // e.g. your service name

"sub": "<fill>", // subject / principal

"role": "admin", // arbitrary data

"iat": time.Now().Unix(), // issued at (optional)

}

token := jwt.NewWithClaims(jwt.SigningMethodEdDSA, claims)

// Sign the token with the private key

signed, err := token.SignedString(priv)

if err != nil {

log.Fatalf("could not sign token: %v", err)

}

fmt.Println(signed)

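}

Then fetch the dependency, build, and run it (or skip compiling with go run .). The string it prints is your token. Store it somewhere safe, because that is what your clients will send:

go mod tidy && go build && ./jwt_gen

Next, share your public key with your server: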

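cd ~/infill-keys && scp ./client_public.pem <username>@<server_ip>:~/ # copy to your home directory first, since writing to /etc needs root

Then, on the server, move it into place. The auth server below expects /etc/auth/client_public.pem, though you can change that:

sudo mkdir -p /etc/auth && sudo mv ~/client_public.pem /etc/auth/client_public.pem

Now, this simple Go server will validate your token. You can build it on your client and scp the binary to your server, or however you see fit. When building on an x86 machine for a 64-bit Pi, cross-compile with GOOS=linux GOARCH=arm64 go build. First, make a new directory, cd into it, and run: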

go mod init nginx_auth_server && touch main.go

and in main.go:

package main

import (

"crypto/ed25519"

"crypto/x509"

"encoding/pem"

"errors"

"fmt"

"log"

"net/http"

"os"

"strings"

"github.com/golang-jwt/jwt/v4"

)

var (

pubKey ed25519.PublicKey

logger *log.Logger

)

func loadPublicKey(path string) (ed25519.PublicKey, error) {

raw, err := os.ReadFile(path)

if err != nil {

return nil, fmt.Errorf("read %s: %w", path, err)

}

block, _ := pem.Decode(raw)

if block == nil { // maybe raw DER

if len(raw) == ed25519.PublicKeySize {

return ed25519.PublicKey(raw), nil

}

return nil, fmt.Errorf("key is not PEM-encoded and is not 32 bytes")

}

pub, err := x509.ParsePKIXPublicKey(block.Bytes)

if err != nil {

return nil, fmt.Errorf("parse PKIX: %w", err)

}

edPub, ok := pub.(ed25519.PublicKey)

if !ok {

return nil, fmt.Errorf("not an Ed25519 key")

}

return edPub, nil

}

func parseAndValidate(jwtString string) (map[string]interface{}, error) {

parsed, err := jwt.Parse(jwtString, func(t *jwt.Token) (interface{}, error) {

if t.Method.Alg() != "EdDSA" {

return nil, errors.New("wrong alg")

}

return pubKey, nil

})

if err != nil {

return nil, err

}

claims, ok := parsed.Claims.(jwt.MapClaims)

if !ok {

return nil, errors.New("claims are not MapClaims")

}

return map[string]interface{}(claims), nil

}

func handler(w http.ResponseWriter, r *http.Request) {

// logRequest(r) // Commented out

auth := r.Header.Get("Authorization")

if auth == "" {

// logger.Printf("⚠️ no token received")

http.Error(w, "invalid token", http.StatusUnauthorized)

return

}

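// Expect "Bearer <token>"; checking the prefix first also avoids slicing past the end of a short header

if !strings.HasPrefix(auth, "Bearer ") {

http.Error(w, "invalid token", http.StatusUnauthorized)

return

}

tokenStr := strings.TrimSpace(strings.TrimPrefix(auth, "Bearer "))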

_, err := parseAndValidate(tokenStr)

if err != nil {

// logger.Printf("⚠️ token rejected: %v", err)

http.Error(w, "invalid token", http.StatusUnauthorized)

return

}

// logger.Printf("Claims: %+v", claims)

w.WriteHeader(http.StatusOK)

}

func main() {

f, err := os.OpenFile(

"auth_server.log",

os.O_CREATE|os.O_WRONLY|os.O_APPEND, 0640)

if err != nil {

log.Fatalf("could not open audit log file: %v", err)

}

defer f.Close()

logger = log.New(f, "", log.LstdFlags|log.Lshortfile)

var errLoad error

pubKey, errLoad = loadPublicKey("/etc/auth/client_public.pem")

if errLoad != nil {

logger.Fatalf("could not load public key: %v", errLoad)

}

http.HandleFunc("/", handler)

addr := "127.0.0.1:9000" // only nginx, on this same machine, needs to reach it

logger.Printf("Starting auth server on %s", addr)

if err := http.ListenAndServe(addr, nil); err != nil {

logger.Fatalf("ListenAndServe: %v", err)

}

}

then:

go mod tidy && go build

You will notice the built binary, nginx_auth_server. We will run it via a service file (saved in /etc/systemd/system/ and enabled like the others):

[Unit]

Description=Auth Endpoint

After=network.target

[Service]

Type=simple

User=<your_user>

WorkingDirectory=<directory_holding_the_files_above>

ExecStart=<absolute_path_to_the_binary>/nginx_auth_server

Restart=on-failure

RestartSec=10s

# Send stdout/stderr straight to journalctl - allows you to easily inspect logs later

StandardOutput=journal

StandardError=journal

[Install]

WantedBy=multi-user.target

This is how I use it in nginx:

server {

listen 443 ssl;

listen [::]:443 ssl;

http2 on;

server_name infill.<your_domain>; # e.g. infill.freno.me

client_max_body_size 10G;

proxy_socket_keepalive on;

location / {

if ($request_method = 'OPTIONS') {

add_header 'Access-Control-Allow-Origin' '$http_origin' always;

add_header 'Access-Control-Allow-Methods' 'POST, OPTIONS' always;

add_header 'Access-Control-Allow-Headers' 'Authorization, Content-Type' always;

add_header 'Access-Control-Allow-Credentials' 'true' always;

add_header 'Access-Control-Max-Age' 86400 always;

return 204;

}

auth_request /auth;

auth_request_set $auth_status $upstream_status;

proxy_hide_header 'Access-Control-Allow-Origin';

proxy_hide_header 'Access-Control-Allow-Credentials';

add_header 'Access-Control-Allow-Origin' '$http_origin' always;

add_header 'Access-Control-Allow-Credentials' 'true' always;

proxy_pass http://<llama_server_ip>:<port>; # where llama.cpp is running on your local network

proxy_set_header Host $host;

proxy_set_header X-Real-IP $remote_addr;

proxy_set_header X-Forwarded-For $proxy_add_x_forwarded_for;

proxy_set_header X-Forwarded-Proto $scheme;

proxy_http_version 1.1;

proxy_set_header Upgrade $http_upgrade;

proxy_set_header Connection "upgrade";

proxy_read_timeout 1800;

proxy_connect_timeout 1800;

proxy_send_timeout 1800;

send_timeout 1800;

proxy_buffering off;

proxy_request_buffering off;

}

location = /auth {

internal;

proxy_pass http://127.0.0.1:9000;

proxy_set_header X-Original-URI $request_uri;

proxy_pass_request_body off;

proxy_set_header Content-Length "";

proxy_set_header Authorization $http_authorization;

}

}

Now, to actually use the above, every request needs to carry the token printed by jwt_gen as a Bearer token in the Authorization header. Do not send the private key itself, which never leaves your machine. Here is how that looks with opencode (simplified):

// opencode.jsonc
{
  "$schema": "https://opencode.ai/config.json",
  "provider": {
    "Whatever you want": {
      "npm": "@ai-sdk/openai-compatible",
      "name": "Whatever you want",
      "options": {
        "baseURL": "https://infill.freno.me/v1",
        // file containing your token (the JWT printed by jwt_gen), not the PEM private key
        "apiKey": "{file:~/.config/opencode/private.key}"
      },
      "models": {
      }
    }
  }
}

Here is how I use it in neovim[13] with llama.vim (using lazy.nvim):

require("lazy").setup({

{

"ggml-org/llama.vim",

init = function()

local keyFile = io.open("<private_key_path>", "r")

local api_key = "_"

if keyFile then

api_key = keyFile:read("*l") -- first line of the file: your token from jwt_gen

keyFile:close()

end

vim.g.llama_config = {

endpoint = "<configured_endpoint>/infill", --- /infill is what is used by llamacpp

api_key = api_key,

keymap_trigger = "<M-Enter>",

keymap_accept_line = "<A-Tab>",

keymap_accept_full = "<S-Tab>",

keymap_accept_word = "<Right>",

stop_strings = {},

n_prefix = 512,

n_suffix = 512,

show_info = 2,

}

end,

} ... }

--- additional config

vim.api.nvim_set_keymap(

"n",

"<leader>it",

":LlamaToggle<CR>",

{ noremap = true, desc = "[i]nfill [t]oggle", silent = true }

)

vim.api.nvim_set_keymap(

"n",

"<leader>ie",

":LlamaEnable<CR>",

{ noremap = true, desc = "[i]nfill [e]nable", silent = true }

)

vim.api.nvim_set_keymap(

"n",

"<leader>id",

":LlamaDisable<CR>",

{ noremap = true, desc = "[i]nfill [d]isable", silent = true }

)

And that's basically it! I also use the infill service for the text editor on this very site (write-up here)!

Notes & References

[1] This is probably the most certain thing about the internet: if it's connected, it's vulnerable.

[2] Fill In (the) Middle - AI that inserts its response within the text. Kinda like a fancy autocomplete.

[3] Currently you can disable these; it probably won't be long before that 'feature' is lost.

[4] https://www.techspot.com/news/110373-microsoft-finally-admits-file-explorer-slow-now-testing.html

[5] https://www.theregister.com/2025/11/25/microsoft_trying_preloading_to_solve/

[7] With the exception of my dual-boot workstation/gaming PC: I occasionally (about once every few months) need to go to Windows land because some installer won't run under Wine.

[8] It's gotten much more difficult to decide what to run due to rising RAM prices. For solo use, I would still recommend the 8GB Pi 5 (https://www.raspberrypi.com/products/raspberry-pi-5/?variant=raspberry-pi-5-8gb). 16GB Pi pricing is ehhhhh; at that price, it seems like you should go for the later-mentioned options, which have a much faster CPU.

[9] Just make sure you go with an M-series Mac; it will blow the doors off the old Intel ones. Additionally, you will need an HDMI dummy plug to run it headless.

[10] Internet Service Provider

[11] As opposed to password credentials: a public key, generated from a private key stored on the client, is shared with the server.

[12] Docker is an easy-to-use containerization tool. A container is basically an operating system in a box that's reproducible and isolated from the rest of your system.